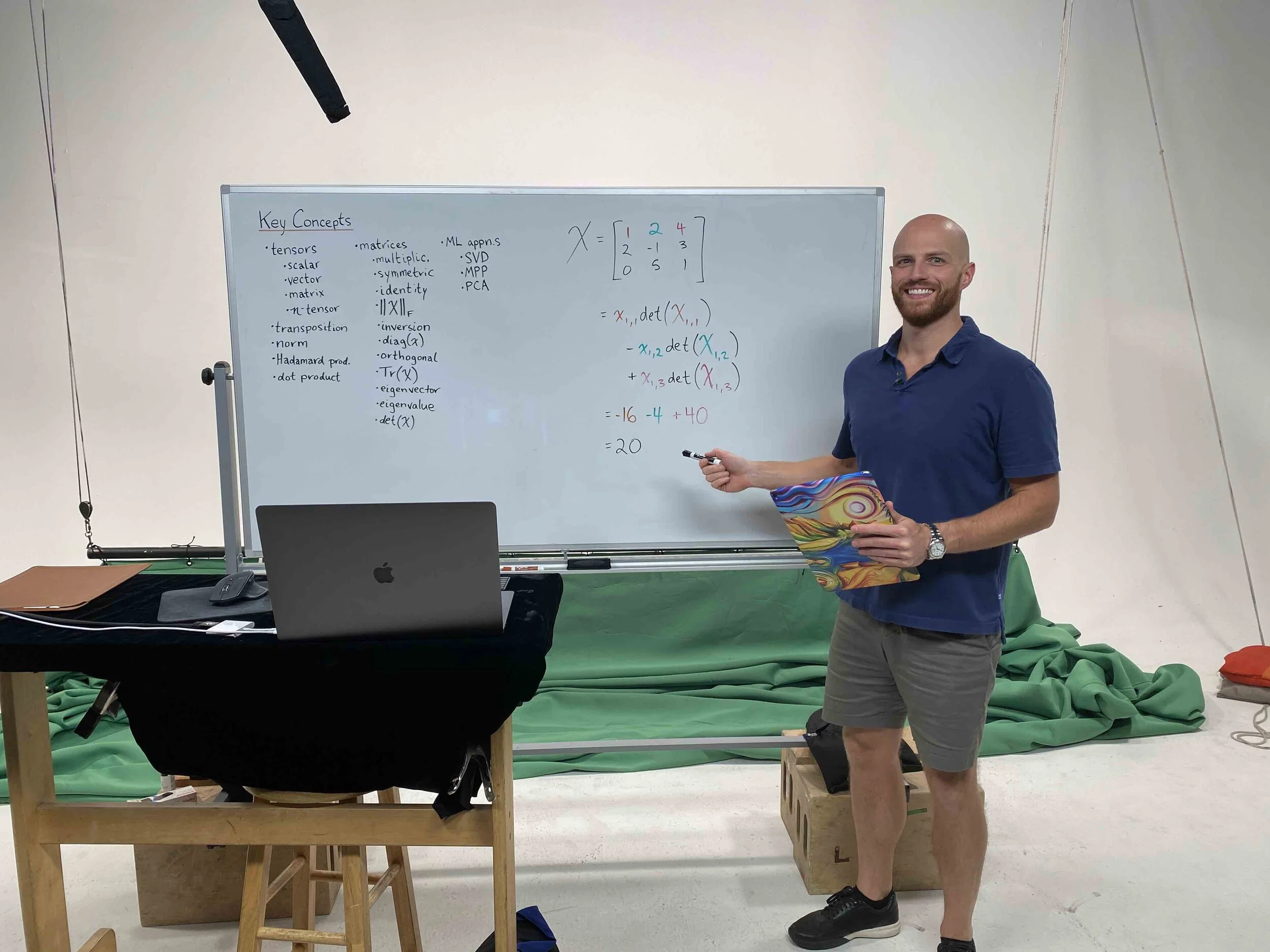

Happy new year! I'm delighted to announce that I've taken over the reins as host of the SuperDataScience podcast as of the January 1st episode (#432).

In November, Kirill Eremenko blew me away by asking whether I'd be interested in hosting the program, and of course I immediately said, "yes!"

By releasing two riveting episodes per week since 2016, Kirill has amassed an extraordinary global audience of 10,000 listeners per episode. I'm over the moon to have the opportunity to share cutting-edge content from the fields of data science, machine learning, and A.I. with so many engaged professionals and students.

Kirill left behind super-sized shoes to fill, but I'm committed to maintaining the lofty standard he set. I'll also be keeping the format he established:

- Odd-numbered episodes feature guests and are released every Wednesday. I've already recorded fun episodes packed with practical insights from Ben Taylor, Erica Greene, and Claudia Perlich, with many more "household" data science names lined up.
- Even-numbered episodes, like #432, are "Five-Minute Fridays". These are short, come out every Friday (duh), and focus on a single data science topic or piece of career advice.

With a challenging 2020 behind us, I hope you're as excited as I am to be starting 2021 off with something new.