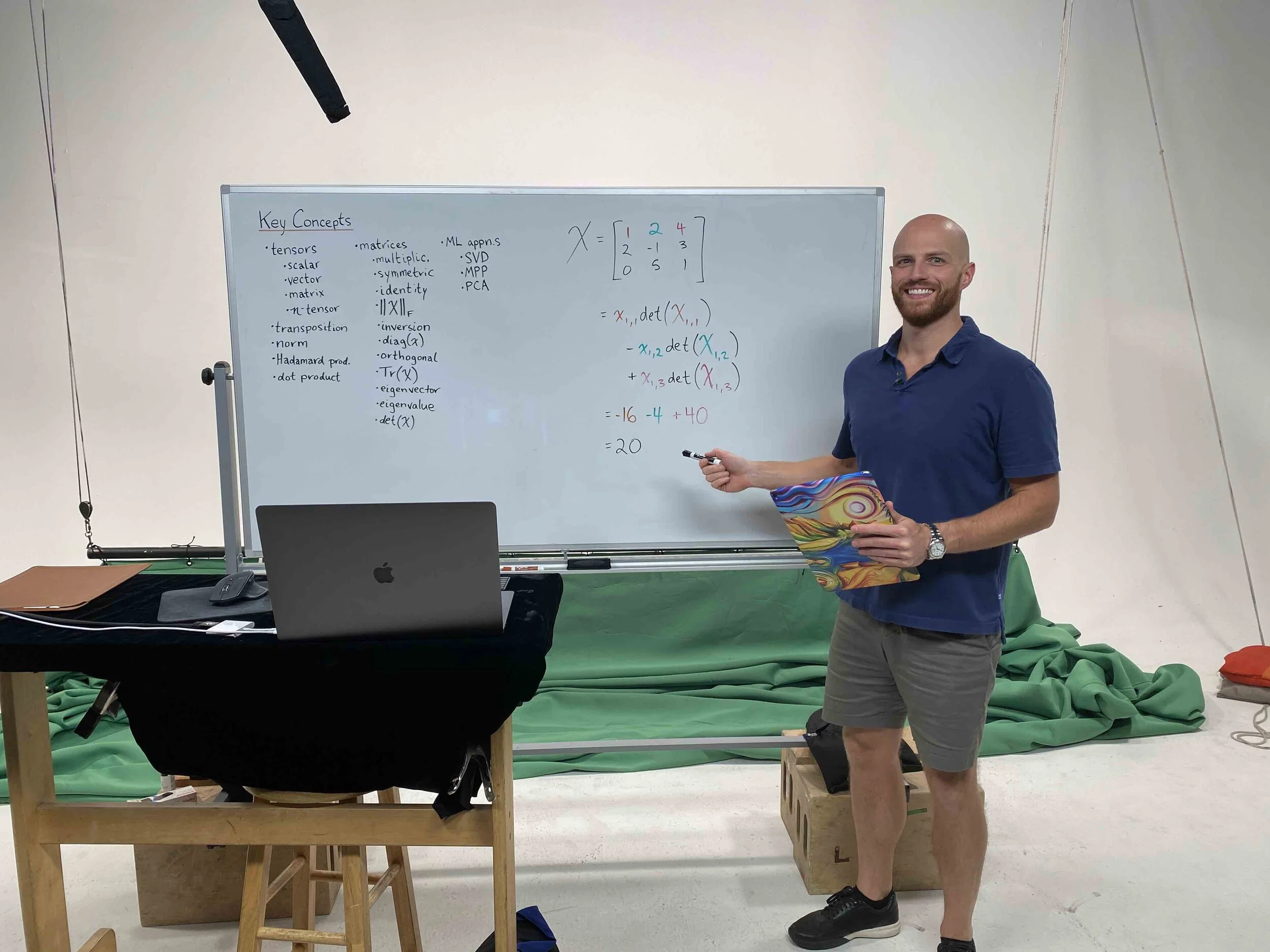

Same shirt, different day with my "weekend crew". We burnt the midnight oil Friday through Sunday last weekend filming what will be eight hours of interactive videos on Calculus for Machine Learning.

Pictured above, from left to right, at New York's Production Central Studios: me, technician Guillaume Rousseau, and producer Erina Sanders.

All of the code (featuring the Python libraries NumPy, TensorFlow, and PyTorch) is available today as open source on GitHub.

The videos themselves will appear on the O'Reilly learning platform later this year, with thanks to Pearson's Debra Williams Cauley for bringing another of my project concepts to life.