Click through for a story from TechTarget on top data science tools. They appear to have misattributed a (perfectly reasonable) quote on R to me, but my quote about Jupyter Notebooks is the real deal.

untapt Hosts Its First Ever AI Think Tank Event In New York City →

On Tuesday afternoon, we (untapt) hosted a Think Tank on the use of algorithms for improving human-resources processes. Our audience was highly engaged and we were delighted that the average of their rankings of candidates for a given role aligned precisely with our algorithm's rankings.

In this press release issued yesterday, I commented:

Through the Think Tank's discussions and interactive exercises, we observed further evidence that both recruiters and candidates gain from the use of AI tools. The event demonstrated an inherent need for what we've built at untapt. As human-resources professionals increasingly appreciate that AI saves them time and capital while simultaneously improving the quality of the prospects they identify for open roles, we are witnessing a shift toward the technology's adoption. This fuels our passion to continue delivering solutions that make a difference.

Human Talent and Artificial Intelligence Collide in Advanced New Offering from untapt →

This press release from July 19th formally launched our (untapt's) AI product, an algorithm for matching candidates with roles at scale. It offers expert-human-recruiter accuracy but is millions of times faster.

In the piece, I was quoted as saying:

The complexity and nuance of problems in the talent space make them fascinating ones to tackle with models that incorporate automation. Through the application of the latest statistical computing approaches from academia, we've harnessed the might of our large, high-dimensional data to streamline human-resource processes with commensurate subtlety. I'm delighted that these models are now available to our clients, minimizing drudgery and freeing up their time to focus on creative, interpersonal work.

Gareth Moody, my colleague and dear friend, opined that my choice of language in the quote was not un-awkward and that my verbosity was excessive.

Want Less-Biased Decisions? Use Algorithms. →

Forthcoming research out of Columbia University indicates that, compared with human screeners, an algorithm that decides which job applicants should get interviews "exhibited significantly less bias against candidates that were underrepresented at the firm".

My 30-Hour Deep Learning Course: 30% Discount for Female Students →

Beginning July 21st for five weekends, I’ll be teaching my 30-hour course on deep learning in-classroom at the NYC Data Science Academy. This will be my third time teaching the course, which I developed based on the content of my forthcoming book.

While the first two iterations of the course — offered in late 2017 and Spring 2018 — were popular, only one out of every ten participants was a woman. In hopes of reaching gender parity, the Academy has whole-heartedly supported my suggestion that we offer a 30% discount to female students — their largest discount ever. To receive the sizeable reduction of nearly $1000, registrants are encouraged to use the code DLFORWOMEN upon checkout.

Deep Reinforcement Learning Experiments Run Simultaneously Across OpenAI and Unity Environments →

SLM Lab is an open-source tool created by Laura Graesser and Wah Loon Keng for training Deep Reinforcement Learning agents across multiple complex environments. At the most recent session of our Deep Learning Study Group, Keng and Laura provided us with an interactive demo of it.

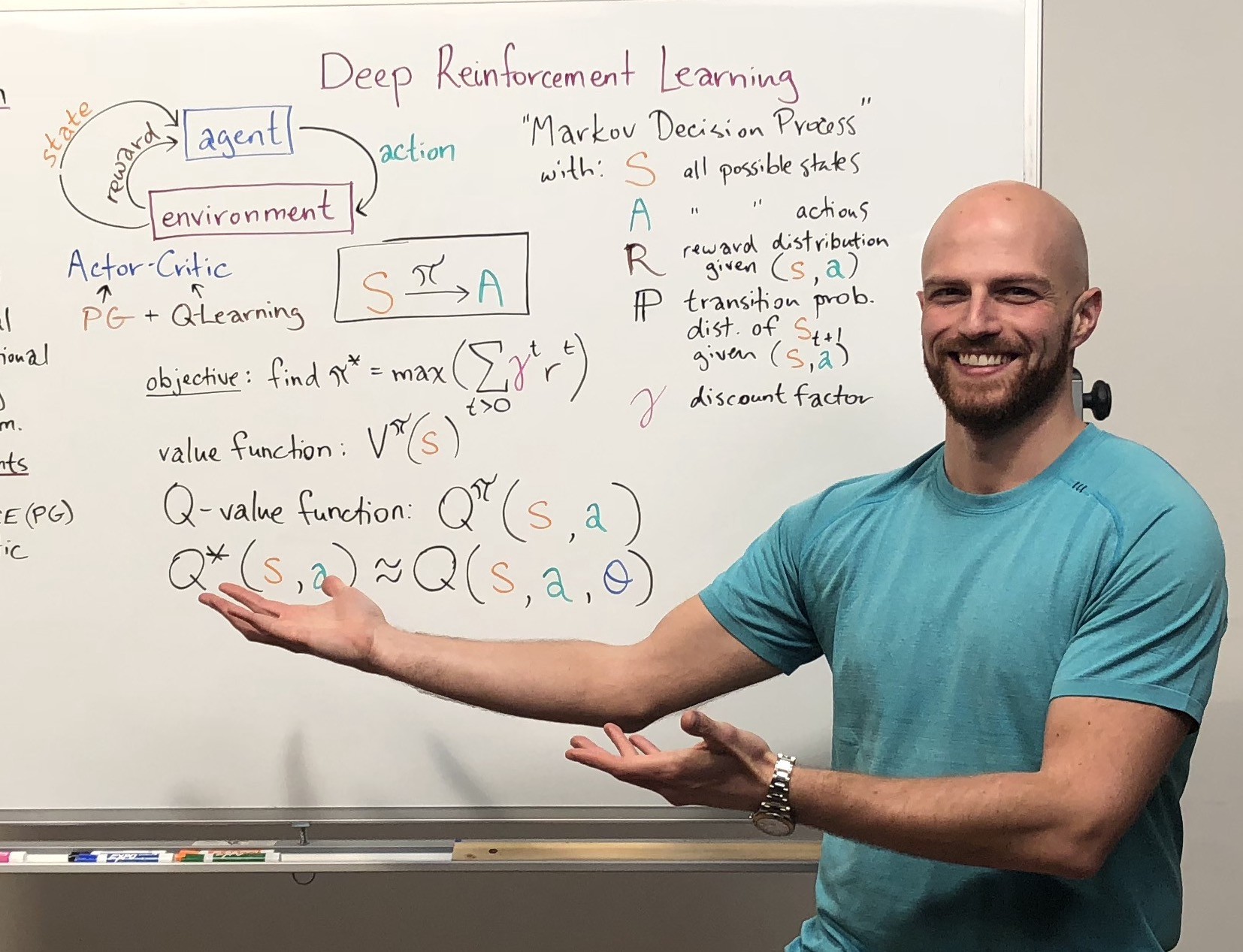

Deep Reinforcement Learning and Generative Adversarial Networks: Tutorials with Jupyter Notebooks →

Two families of Deep Learning architectures have yielded the lion’s share of recent years' “artificial intelligence” advances. Click through for this blog post, which introduces both families and summarises my newly-released interactive video tutorials on them.

Filming “Deep Reinforcement Learning and Generative Adversarial Network LiveLessons” →

Following on from my Deep Learning with TensorFlow and Deep Learning for Natural Language Processing tutorials, I recently recorded a new set of videos covering Deep Reinforcement Learning and Generative Adversarial Networks. These two subfields of deep learning are arguably the most rapidly evolving, so it was a thrill both to learn about these topics and to put the videos together.

“OpenAI Lab” for Deep Reinforcement Learning Experimentation →

With SantaCon’s blizzard-y carnage erupting around untapt’s Manhattan office, the members of the Deep Learning Study Group trekked through snow and crowds of eggnog-saturated merry-makers to continue our Deep Reinforcement Learning journey.

We were fortunate to have this session led by Laura Graesser and Wah Loon Keng. Laura and Keng are the human brains behind the OpenAI Lab Python library for automating experiments that involve Deep RL agents exploring virtual environments. Click through to read more.

"Deep Learning with TensorFlow" is a 2017 Community Favourite

My Deep Learning with TensorFlow tutorial made my publisher's list of their highest-rated content in 2017. To celebrate, the code "FAVE" gets you 60% off the videos until January 8th.

Deep Reinforcement Learning: Our Prescribed Study Path →

At the fifteenth session of our Deep Learning Study Group, we began coverage of Reinforcement Learning. This post introduces Deep Reinforcement Learning and discusses the resources we found most useful for starting from scratch in this space.

"Deep Learning for Natural Language Processing" Video Tutorials with Jupyter Notebooks →

Following on the heels of my acclaimed hands-on introduction to Deep Learning, my Natural Language Processing-specific video lessons are now available in Safari. The accompanying Jupyter notebooks are available for free on GitHub.

Build Your Own Deep Learning Project via my 30-Hour Course →

My 30-hour Deep Learning course kicks off next Saturday, October 14th at the NYC Data Science Academy. Click through for details of the curriculum, a video interview, and specifics on how I'll lead students through the process of creating their own Deep Learning project, from conception all the way through to completion.

Deep Learning Course Demo

In February, I gave the above talk -- one of my favourites, on the Fundamentals of Deep Learning -- to the NYC Open Data Meetup. Tonight, following months of curriculum development, I'm providing an overview of the part-time Deep Learning course that I'm offering at the Meetup's affiliate, the NYC Data Science Academy, from October through December. The event is sold out, but a live stream will be available from 7pm Eastern Time (link through here).

“Deep Learning with TensorFlow” Introductory Tutorials with Jupyter Notebooks →

My hands-on introduction to Deep Learning is now available in Safari. The accompanying Jupyter notebooks are available for free on GitHub. Below is a free excerpt from the full series of lessons:

How to Understand How LSTMs Work →

In the twelfth iteration of our Deep Learning Study Group, we talked about a number of Deep Learning techniques pertaining to Natural Language Processing, including Attention, Convolutional Neural Networks, Tree Recursive Neural Networks, and Gated Recurrent Units. Our collective effort was primarily focused on a popular close relative of the latter -- Long Short-Term Memory units. This blog post provides a high-level overview of what LSTMs are, why they're suddenly ubiquitous, and the resources we recommend working through to understand them.

Filming "Deep Learning with TensorFlow LiveLessons" for Safari Books →

We recently wrapped up filming my five-hour Deep Learning with TensorFlow LiveLessons. These hands-on, introductory tutorials are published by Pearson and will become available in the Safari Books online environment in August.

HackFemme 2017 →

On Friday, I had the humbling experience of contributing to a panel on becoming a champion of diversity at the Coalition for Queens' inaugural (and immaculately-run) HackFemme conference for women in software engineering. The consensus among the panelists (Sarah Manning, Etsy; Patricia Goodwin-Peters, Kate Spade) during the articulately-moderated hour (thanks to Valerie Biberaj) was that much can be accomplished by focusing on the present moment and, for example, speaking up for the softer-voiced contributors to a business discussion.

Deep Learning Study Group XI: Recurrent Neural Networks, including GRUs and LSTMs →

Last week, we welcomed Claudia Perlich and Brian Dalessandro as outstanding speakers at our study session, which focused on deep neural net approaches for handling natural language tasks like sentiment analysis or translation between languages.

Deep Learning Study Group 10: word2vec Mania + Generative Adversarial Networks →

In our tenth session, we delved into the theory and practice of converting human language into fodder for machines. Specifically, we discussed the mathematics and software application of word2vec, a popular approach for converting natural language into a high-dimensional vector space.